Data synchronisation and technical aspects of customer segmentation

Strong understanding of audience segmentation, extensive expertise in analysing and segmenting CRM datasets, knowledge of data segmentation, and experience delivering personalised content: these are some of the most common requirements in job offers for CRM and lifecycle marketing roles. Although customer segmentation is straightforward to grasp at first, becoming an expert in the field means delving into the technical specifics. We hope this guide helps you make that leap.

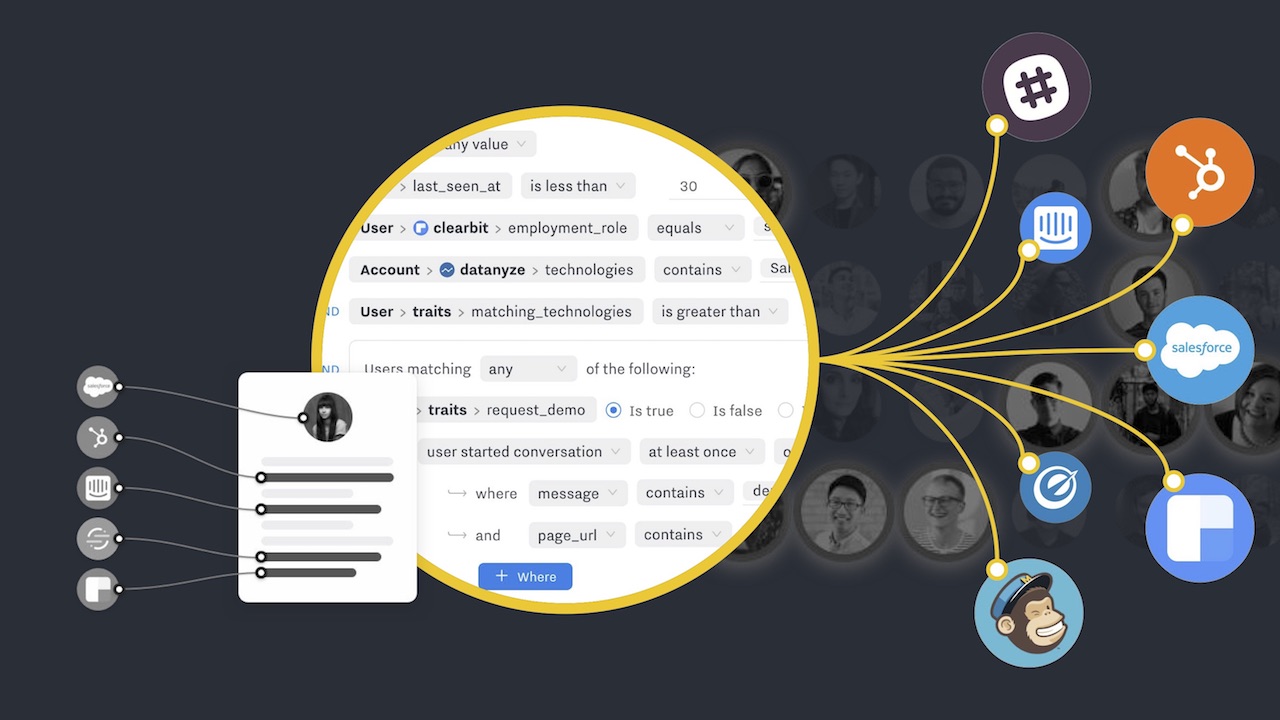

Client data must first be gathered in a single, central location so that it can be prepared for grouping and used for customer segmentation. That sounds simple, but collecting and analysing data is becoming more difficult as the number of data sources grows.

This is why the first step in effective segmentation is maintaining a consistent flow of data from several sources to a single server. You may have heard this operation referred to as “data synchronisation” or “data sync”. It involves first bringing the systems into a consistent state and then applying ongoing updates to keep them in step.

The technical jargon may suggest that this is primarily the engineering team’s responsibility. However, it helps greatly if you are familiar with the fundamental ideas. Here is a summary:

Data synchronisation: in this section, we’ll examine how customer data is stored, why teams want to move it between systems, and the challenges digital teams face in doing so. It also explains, in practical terms, how modern CRM systems share data over the Internet through APIs.

The following section, on data integrity and security, explains how to keep data consistent after synchronisation. We’ll cover the use of schemas to ensure data uniqueness and avoid duplication. We’ll also consider the technical side of data security and privacy, which have gained first-class status in the post-GDPR and CCPA era.

Finally, we’ll help you become fluent in data crunching with some advice on data filtering and on making data-driven decisions in general.

CSV, however, has two significant drawbacks. First, a CSV file cannot represent hierarchy. You cannot, for instance, express the relationship between a customer and their individual orders without flattening the data into repeated rows. The second major flaw is that CSV is a fixed format: data can only be exchanged according to the exact number and order of columns agreed at the start. If you add a column that another target system requires, or simply remove one, the import attempt will fail with an error. In that situation, developers have to change the code to reflect the structural changes.
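To make both drawbacks concrete, the Python sketch below uses only the standard `csv` and `json` modules. The customer record, the column names and the `strict_import` helper are hypothetical; the importer simply stands in for a target system that expects an agreed, fixed column layout.

```python
import csv
import io
import json
from typing import Dict, List

# Hypothetical customer with nested orders: a simple hierarchy.
customer = {
    "customer_id": "c1",
    "name": "Jane Doe",
    "orders": [
        {"order_id": "o1", "total": 25.0},
        {"order_id": "o2", "total": 40.0},
    ],
}

# JSON keeps the customer -> orders relationship intact.
as_json = json.dumps(customer)

# The column layout both systems agreed on up front.
EXPECTED_COLUMNS = ["customer_id", "name", "order_id", "total"]


def to_flat_csv(cust: dict) -> str:
    """CSV is flat: write one row per order, repeating the customer fields."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(EXPECTED_COLUMNS)
    for order in cust["orders"]:
        writer.writerow([cust["customer_id"], cust["name"], order["order_id"], order["total"]])
    return buf.getvalue()


def strict_import(text: str) -> List[Dict[str, str]]:
    """Reject any file whose header differs from the agreed column layout."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader)
    if header != EXPECTED_COLUMNS:
        raise ValueError(f"unexpected columns: {header}")
    return [dict(zip(header, row)) for row in reader]
```

The JSON version keeps both orders nested under one customer, while the CSV version repeats the customer’s name on every order row. Inserting a single hypothetical `loyalty_tier` column into the CSV header is enough to make `strict_import` raise a `ValueError`.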

This rigidity has become a significant barrier, given how dynamic marketing technology has become in recent years.

Frequency of synchronisation

Nowadays, real-time ecommerce solutions are a standard demand. Customers want to know the status of their order, a real-time tracking number for their package, or their account’s current balance. Marketers, in turn, want to launch real-time ads that prompt timely purchases, and to react quickly.

To accomplish that, the underlying data stores need real-time synchronisation in place.

There are two issues with real-time synchronisation, though, that should be taken into consideration.

As a general rule, the closer to real time you want your data, the more expensive it is. This cost shows up in developers’ time, but also in the hardware (today mostly cloud infrastructure) you need to keep the data synchronisation systems up and running. Therefore, before telling engineers that you need something “real-time”, first think about the synchronisation frequency you actually require. Perhaps hourly or daily updates are enough to guarantee an excellent customer experience while minimising developer effort and saving money.
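A quick back-of-the-envelope calculation shows how fast the load grows with frequency. This Python sketch assumes a hypothetical polling setup that makes one API call per customer per sync run, with no batching; real costs depend on your architecture and provider pricing.

```python
MINUTES_PER_DAY = 24 * 60


def sync_runs_per_day(interval_minutes: float) -> float:
    """How many times a scheduled sync runs in one day."""
    return MINUTES_PER_DAY / interval_minutes


def api_calls_per_day(interval_minutes: float, customers: int) -> float:
    # Hypothetical assumption: one API call per customer per run, no batching.
    return sync_runs_per_day(interval_minutes) * customers


if __name__ == "__main__":
    for label, minutes in [("every minute", 1), ("hourly", 60), ("daily", MINUTES_PER_DAY)]:
        print(f"{label:>12}: {api_calls_per_day(minutes, 10_000):,.0f} calls/day")
```

For 10,000 customers, syncing every minute means 14,400,000 calls a day, against 240,000 for hourly updates and 10,000 for a single daily run.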

Data synchronisation triggers

Assume we have two application servers ready to communicate. To be more specific, suppose your email service provider (ESP) needs to know how many times a customer has ordered from your online store, so that it can send them a discount coupon after their tenth purchase. What kind of data flow can make this scenario a reality? On the ESP side, there are three distinct “triggers” that can initiate the coupon email.

Data polling: in this approach, the ESP repeatedly requests Jane Doe’s total number of orders from the e-shop API at a fixed interval, such as every minute, hour or day. Each time a response arrives, the ESP re-evaluates the coupon send-out conditions based on the data it has received. If you suspect that requesting and processing data this way is not the best course of action, your intuition is correct: most requests return data that has not changed, and a new order is only noticed at the next poll. What is the alternative?
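A minimal polling loop might look like the Python sketch below. `fetch_order_count` stands in for a call to the e-shop API and is hypothetical; passing it in as a function lets the example run without a real store. The threshold of ten mirrors the coupon scenario.

```python
import time
from typing import Callable, Optional

COUPON_THRESHOLD = 10  # coupon after the tenth purchase


def poll_for_coupon(
    fetch_order_count: Callable[[str], int],  # hypothetical e-shop API call
    customer_id: str,
    interval_seconds: float,
    max_polls: int,
) -> Optional[int]:
    """Poll the shop until the customer reaches the threshold.

    Returns the poll number on which the coupon was triggered, or None.
    """
    for poll in range(1, max_polls + 1):
        count = fetch_order_count(customer_id)  # one API request per poll
        if count >= COUPON_THRESHOLD:
            return poll
        time.sleep(interval_seconds)  # wait, then ask again even if nothing changed
    return None
```

With a fake shop that returns the counts 8, 8, 9, 9 and 10 on successive calls, `poll_for_coupon` triggers on the fifth poll, and four of the five requests led to no action.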

Data pushing: what if the online store could alert the ESP application the instant Jane placed her tenth order? That is exactly what data pushing does. Modern e-commerce platforms have recognised the drawbacks of polling and now include such notifications in their feature set, typically under the name webhooks or call-outs. It is a straightforward but effective feature: it lets you choose which applications should be notified, when, and under what circumstances. You can also choose what information a notification should include. Sometimes it makes sense to send the whole record, but often the modified property alone suffices.
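On the receiving side, a webhook is just an HTTP endpoint that the shop calls when something happens. The Python sketch below uses the standard library’s `http.server`. The payload shape (`event`, `customer_id`, `order_count`) is a hypothetical example of a notification that carries only the changed property, so check your platform’s webhook documentation for the real format. The in-memory `sent_coupons` set is a simplification that guards against duplicate deliveries.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

COUPON_THRESHOLD = 10
sent_coupons = set()  # customers who already received the coupon (in memory only)


def handle_order_webhook(body: bytes) -> bool:
    """Process one push notification; return True if a coupon should be sent.

    Hypothetical payload: {"event": "order.created", "customer_id": "...", "order_count": 10}
    """
    payload = json.loads(body)
    if payload.get("event") != "order.created":
        return False
    customer_id = payload["customer_id"]
    if payload["order_count"] >= COUPON_THRESHOLD and customer_id not in sent_coupons:
        sent_coupons.add(customer_id)  # avoid duplicate coupons if the shop retries
        return True
    return False


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        if handle_order_webhook(self.rfile.read(length)):
            print("queue coupon email")  # placeholder for the ESP's send logic
        self.send_response(204)  # acknowledge receipt with an empty body
        self.end_headers()
```

To try it locally, you could start the server with `HTTPServer(("", 8000), WebhookHandler).serve_forever()` and register that address with the shop as the webhook URL. Unlike the polling loop, nothing happens until the shop reports a new order.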